Creative Bot Bulletin #4

By Judith van Stegeren

A NOTE FROM THE EDITOR

Hi everyone! It's summer. I was expecting the pace of new AI developments to slow down a bit as everyone goes off on vacation, but it's almost as if the opposite is true. It has been a busy two months! We've been talking to a lot of AI startup founders here in the Netherlands, to get to know our local startup eco-system a bit better. I've also had fun evaluating new LLM tools, libraries, and products. I've become more active on Mastodon and found a few good new machine learning newsletters. These help me make sense of the flood of information that is flying around on the web. I hope this newsletter helps you a bit in the same way. Cheers!

—Judith

Featured

For some tasks, GPT models get worse over time

Some people online observed that GPT-4 seems to get worse over time. A team of researchers from Stanford and Berkeley has now drawn the same conclusion with a structured testing approach.

They tested the March 2023 and June 2023 versions of GPT-3.5 and GPT-4 on math problems, sensitive question answering, prompt injection, code generation, and visual reasoning. Especially the math problems and code generation got significantly worse. This problem is known as "model drift" or "model performance shift".

To its users, GPT-4 seems to be the same model in March 2023 and June 2023, but this evaluation shows that the model changes over time. How and why exactly it changes is not always apparent. It is understandable that OpenAI makes changes to a model to improve its usability for specific tasks. But an improvement for one task might very well lead to a performance decrease for another. This is especially bothersome when GPT is part of a pipeline, such as the ML backend of an app.

Based on their findings, the researchers recommend that companies that use LLMs start implementing model performance drift monitoring, and I fully agree.

Paper on arXiv: How is ChatGPT's behavior changing over time? by Chen et al.

Generative AI

LLMs on the command line

I regularly test new products that incorporate LLMs, and also libraries and tools for developing (with) LLMs. One of the things I came across was a set of command line tools for working with large language models, written by Simon Willison (Datasette). It started out with support for openAI API models, but later it was expanded to local models and models on machine learning platform Replicate as well. The tools support UNIX pipes, and as a person who prefers to work with plain text data as much as possible, I love this. So you can combine it with other tools like grep, curl, and jq for superfast querying.

Here's a (very bad) example poem I generated with it:

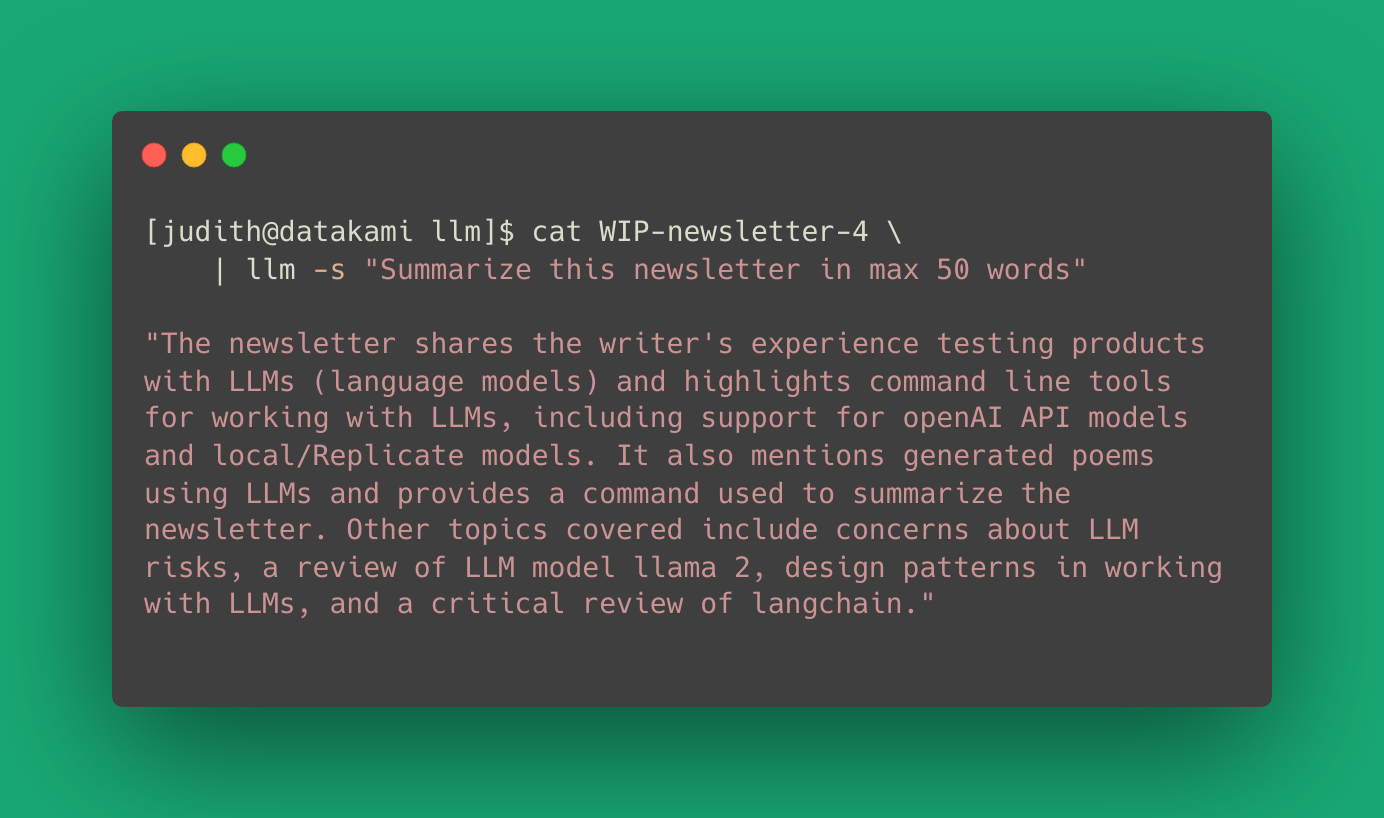

Here's the command I used to summarize this very newsletter, while I was writing it.

Review of Llama-2

Zvi Mowshowitz wrote an extensive review of open-source language model Llama-2.

Zvi's blog is great for the comprehensive summaries of developments in the LLM field. This article puts Llama-2 in context and highlights some caveats. It's also worth reading for the links to other resources.

Usage patterns of LLMs

Now that more people have been working with LLM for some time, there are slowly emerging some design and usage patterns. Fancy names for common usage patterns are nothing new: researchers and practitioners coin new names for these things all the time, but the ones below seem to stick, as I came across them several times in the past months:

- Chain of Thought prompting: asking the language model to generate an answer and explain the reasoning behind it. Some people find that generating the reasoning first can help the model with generating a better answer subsequently.

- ReAct pattern: combine reasoning and acting by interleaving chain-of-thought generation and action plan generation.

- Retrieval-augmented generation: when prompting, also query a database to get extra context (examples, extra information) for the prompt, and feed the results to the generator to get higher quality outputs.

Review of langchain

Max Woolf, from gpt-2-simple fame, wrote a very readable critical review of langchain: The problem with langchain.

Risks of LLMs

ML engineers Mickey Beurskens and Carlo Lepelaars have written a long-form write-up about the risks of LLMs, mostly from an information security and social perspective. I've seen multiple blogposts touch upon these topics, but this is the first overview post I've seen. I'm sure we will see more like this in the future.

Video Games

INSIDE

Indie game INSIDE delivers an intriguing narrative, despite the absence of any text or dialogue. It combines elements of science fiction, horror, and thriller genres, with occasional blood-curdling moments. INSIDE is from Playdead, the developer that also created Limbo. The game is a puzzle/platformer, but luckily it's rather forgiving for casual gamers (like me). The puzzles never got in the way of experiencing the story. Horrific, creepy fun for people who like dystopian fiction, minimalist cell-shaded graphics, Limbo, Rain World, and Shaun Tan's Arrival.

Datakami news

New website

Datakami has a new website! We've made sure you can find previous editions of the Creative Bot Bulletin there, and we have a new blog!

New Datakami blogposts

- Overview of open and commercially usable LLMs

- Industry dynamics in Generative AI

PyData Amsterdam in September

Yorick and I will be at PyData Amsterdam from September 14 to September 16. We'll be hosting a tutorial on using llama-index, a Python library for building and querying custom indices. I am looking forward to meeting up with everyone again in real life. Drop us a line if you're also attending and would like to meet up!

More information about the conference: https://amsterdam.pydata.org/

Schedule: https://amsterdam2023.pydata.org/cfp/schedule/

Datakami on TV

Dutch children's tv programme Klaas Kan Alles contacted us again, this time to invite us to be on-camera! Judith will be featured in the AI-themed episode to give some background information on ChatGPT. We filmed the episode in the beautiful public library of Vught, which is located in a repurposed church. The episode will probably be aired in October or November 2023.

More like this

Subscribe to our newsletter "Creative Bot Bulletin" to receive more of our writing in your inbox. We only write articles that we would like to read ourselves.