Creative Bot Bulletin #5

By Yorick van Pelt

A NOTE FROM THE EDITOR

Hi everyone. Time for another issue of our "monthly" newsletter, slightly delayed by some holidays and two conferences. To compensate, I selected the best content from the past two months.

---Yorick

Featured

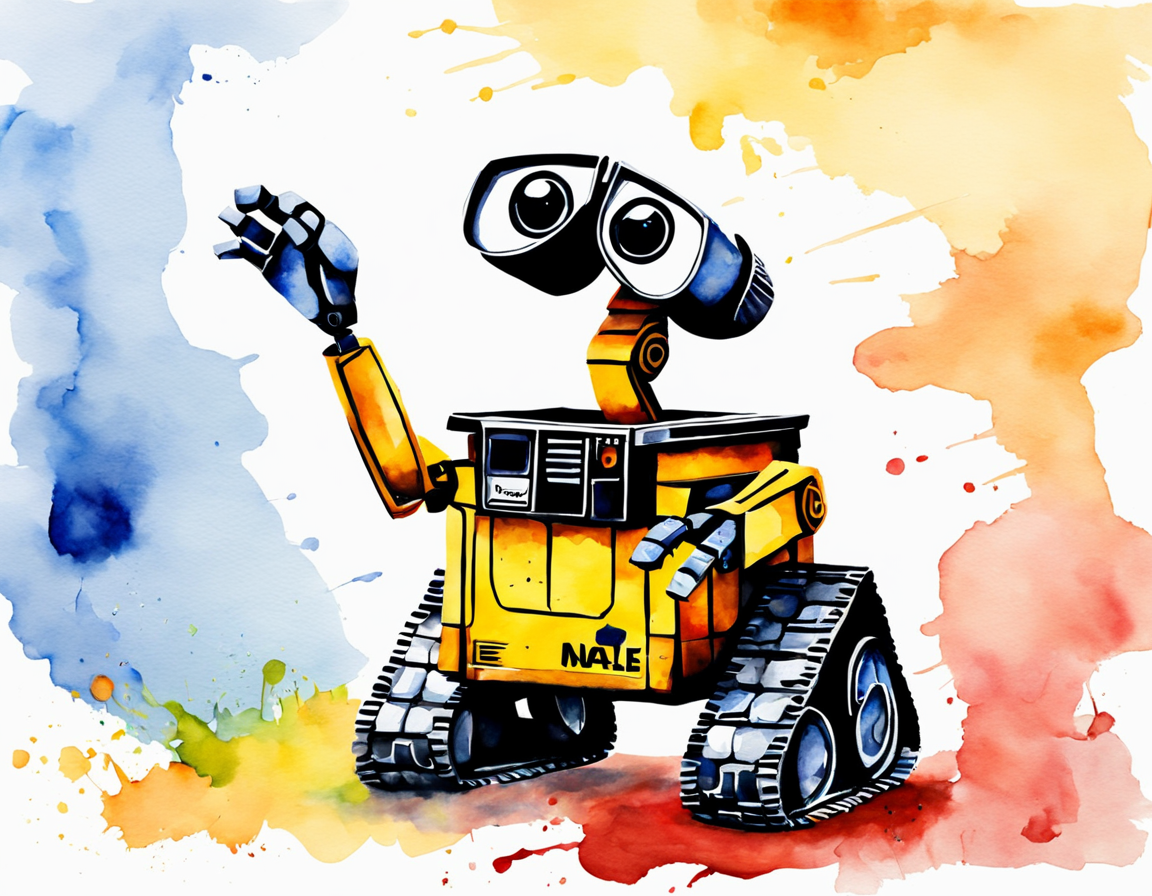

(Pictured: WALL-E in watercolour style by SDXL)

SDXL and Fooocus

A new version of Stable Diffusion, SDXL, was released on July 26th. I finally got around to trying it out using the Fooocus GUI by ControlNet author Lvmin Zhang. This UI is full of tricks that minimize the number of knobs you have to touch to get good results, so you can focus on writing the text prompts. Above: "Wall-E" in the watercolour style. As you can see, SDXL can almost render legible text, which the older versions were terrible at.

For now, Fooocus only does the basics (but very well). For more advanced usage (inpainting, fine-tuning, ControlNet), I recommend ComfyUI.

Generative AI

Gemini

Google has been training models (called Gemini) that are rumored to beat GPT-4, and plans to release them near the end of this year. The blog SemiAnalysis published a popular article about the growing divide between the companies with enough GPUs and the rest of the world, which is focusing on optimizing its code to run on scarce hardware.

The prediction markets aren't convinced that Gemini will beat GPT-4 this year, but it seems likely that Google will keep at it and beat OpenAI next year.

Other uses than language

One of the papers that caught my attention is "Transformers are Universal Predictors", in which the researchers fed inputs other than language into Large Language Models to see if the models could work with them. One of the things they tried was balancing an inverted pendulum, and it seems to work. My conclusion is that LLMs might be a viable approach to predicting arbitrary time-series data.
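To make "feeding time-series data into an LLM" a bit more concrete, here is a minimal sketch of one way it could be done: quantize each number into an integer bin, write the bins out as comma-separated text, let a model continue the sequence, and map the continuation back to numbers. This is my own illustration, not the method from the paper. All function names are hypothetical, and `repeat_last_token` is a stand-in for a real LLM call so the example runs without any API.

```python
from typing import Callable, List


def encode_series(values: List[float], low: float, high: float, bins: int = 100) -> str:
    """Map each value to an integer bin in [0, bins - 1] and join with commas."""
    if high <= low:
        raise ValueError("high must be greater than low")
    span = high - low
    tokens = []
    for v in values:
        clipped = min(max(v, low), high)  # keep out-of-range values inside the grid
        idx = min(int((clipped - low) / span * bins), bins - 1)
        tokens.append(str(idx))
    return ", ".join(tokens)


def decode_series(text: str, low: float, high: float, bins: int = 100) -> List[float]:
    """Turn comma-separated bin indices back into bin-centre values."""
    width = (high - low) / bins
    out = []
    for tok in text.split(","):
        tok = tok.strip()
        if tok.isdigit():  # ignore anything that is not a plain bin index
            idx = min(int(tok), bins - 1)
            out.append(low + (idx + 0.5) * width)
    return out


def predict_next(
    values: List[float],
    complete: Callable[[str], str],
    low: float,
    high: float,
    bins: int = 100,
    steps: int = 1,
) -> List[float]:
    """Build a text prompt from the series and decode the model's continuation."""
    prompt = encode_series(values, low, high, bins) + ", "
    completion = complete(prompt)
    return decode_series(completion, low, high, bins)[:steps]


def repeat_last_token(prompt: str) -> str:
    """Hypothetical stand-in for an LLM call: echoes the last number in the prompt."""
    tokens = [t.strip() for t in prompt.split(",") if t.strip()]
    return tokens[-1]


if __name__ == "__main__":
    history = [0.0, 0.5, 1.0]
    print(encode_series(history, 0.0, 1.0, bins=10))
    print(predict_next(history, repeat_last_token, 0.0, 1.0, bins=10))
```

To try this with an actual model, you would replace `repeat_last_token` with a function that sends the prompt to an LLM and returns its text completion. The number of bins trades off resolution against prompt length.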

Multiple agents working together

Something that's old by now (May 2023!) is the paper "Generative Agents: Interactive Simulacra of Human Behavior". Researchers simulated an entire village of people using LLMs, which certainly isn't cheap to run, but provided some very interesting results.

More recently, work on collaboration between multiple LLMs has picked up. A good summary can be found in last month's MetaGPT paper.

This also prompted me to look more into the AI Agent ecosystem, but I'm just getting started there. Stay tuned for more!

Video Games

Rain World

From Wikipedia: This is a survival platform video game from 2017.

From me: The cool part about this game is that all the creatures are simulated at all times and continue to exist in the world even when they're not on screen. They're driven by a learning AI that follows simple rules rather than scripted actions, and those rules add up to complex behavior.

Datakami news

Yorick's going full-time

Great news! I started working on Datakami full-time on September 1st. I'm curious to see what can be done with twice as much time.

Blog post: A first look at Claude

Judith published a review of Anthropic's Claude on our blog, comparing it against GPT-4 and highlighting some of its capabilities.

Mastodon presence

Judith has been trying out and talking about some recent software projects on Mastodon. Follow her at @jd7h@fosstodon.org.

Llama-Index tutorial at PyData Amsterdam

Judith and I hosted a tutorial at PyData Amsterdam on using llama-index, a Python library for building and querying custom indices. Materials are available on GitHub. Let us know if there are any other workshop topics you would like to see from us at future events!

Lecture at Oyfo on October 2nd

Judith will be giving a lecture on AI at the Oyfo Technical museum in Hengelo on October 2nd. Come by if you're in the area!

Thank you for reading! If you enjoyed this newsletter, consider forwarding it to a friend. Follow us on LinkedIn for more frequent updates.
