Creative Bot Bulletin #7

By Alexander de Ranitz

A NOTE FROM THE EDITOR

Hello there! Welcome to another Datakami newsletter! As seems almost unavoidable nowadays, the past few weeks brought some big releases of new and improved models, such as Llama 3 and Stable Diffusion 3. Besides looking at those, I have been enjoying reading about unexpected applications of transformers, from high-energy physics experiments to playing chess. Read on to find out more. I hope you enjoy this newsletter, and see you next time!

—Alexander

Featured: Stable Diffusion 3

Stability AI just released its newest Stable Diffusion model: SD3. The biggest improvement in SD3 seems to be its ability to render legible text within images. SD3 uses a new Multimodal Diffusion Transformer (MMDiT) architecture, which uses separate sets of weights for image and text representations while letting text and image tokens influence each other through attention. In SD3's own words, this is the result:

(Prompt: Beautiful pixel art of a Wizard with hovering text 'Achievement unlocked: Diffusion models can spell now.')
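To make the MMDiT idea a bit more concrete, here is a minimal pure-Python sketch of a single joint attention step. It assumes one attention head and leaves out normalization, timestep conditioning and MLPs; the names `joint_attention`, `text_w` and `image_w` are hypothetical, and this is not Stability AI's implementation. Text and image tokens each get their own projection weights, but attention runs over the concatenated sequence, so each modality can influence the other.

```python
import math


def matmul(a, b):
    """Multiply an n x k matrix by a k x m matrix (lists of lists)."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(a):
    return [list(row) for row in zip(*a)]


def softmax(row):
    m = max(row)
    e = [math.exp(x - m) for x in row]
    total = sum(e)
    return [x / total for x in e]


def attention(q, k, v):
    """Scaled dot-product attention over the full (unmasked) sequence."""
    d = len(q[0])
    scores = matmul(q, transpose(k))
    weights = [softmax([s / math.sqrt(d) for s in row]) for row in scores]
    return matmul(weights, v)


def joint_attention(text, image, text_w, image_w):
    """Hypothetical, simplified MMDiT-style joint attention (single head).

    text_w and image_w are separate weight sets, each a dict holding
    d x d matrices under the keys "q", "k", "v" and "o".
    """
    # Each modality is projected with its own weights.
    tq, tk, tv = (matmul(text, text_w[n]) for n in ("q", "k", "v"))
    iq, ik, iv = (matmul(image, image_w[n]) for n in ("q", "k", "v"))
    # Attention runs over the concatenated sequence, so text tokens can
    # attend to image tokens and vice versa.
    mixed = attention(tq + iq, tk + ik, tv + iv)
    # Split the sequence back and apply modality-specific output projections.
    n_text = len(text)
    return matmul(mixed[:n_text], text_w["o"]), matmul(mixed[n_text:], image_w["o"])
```

Keeping separate weights lets each modality learn its own representation, while the shared attention is the place where text and image information mix: changing only the text tokens changes the image tokens' output.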

Forbes Deep Dive into Stability AI

This Forbes article, "How Stability AI’s Founder Tanked His Billion-Dollar Startup", dives into how Stability AI went from a flourishing startup to a financial disaster under the leadership of its now-former CEO, Emad Mostaque. It is a great example of how the lack of a coherent strategy can hold back a startup with great technology.

Leave No Context Behind: Efficient Infinite Context Transformers with Infini-attention

This new paper from Google proposes a modified attention mechanism that allows transformers to process infinitely long inputs with bounded memory and computation. To do so, the authors combine regular local attention with a compressive memory that stores information about the entire context history. According to the authors, this will help LLMs reason, plan, and understand longer contexts.
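For the curious, here is a rough pure-Python sketch of the Infini-attention idea. It assumes a single head, the ELU+1 feature map and simple additive memory update described in the paper, and a fixed gate `beta` (learned in the paper); all names are hypothetical and this is not Google's code. Each segment mixes causal local attention with a read from a compressive memory that summarizes all previous segments, then writes its own keys and values into that memory. The memory is a d_k by d_v matrix plus a normalization vector, so its size does not grow with the length of the input.

```python
import math


def matmul(a, b):
    """Multiply an n x k matrix by a k x m matrix (lists of lists)."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(a):
    return [list(row) for row in zip(*a)]


def softmax(row):
    m = max(row)
    e = [math.exp(x - m) for x in row]
    total = sum(e)
    return [x / total for x in e]


def elu_plus_one(x):
    # sigma(x) = ELU(x) + 1 keeps all features positive.
    return x + 1.0 if x > 0 else math.exp(x)


def local_attention(q, k, v):
    """Causal dot-product attention within a single segment."""
    d = len(q[0])
    scores = matmul(q, transpose(k))
    weights = [softmax([s / math.sqrt(d) if j <= i else -math.inf for j, s in enumerate(row)])
               for i, row in enumerate(scores)]
    return matmul(weights, v)


class CompressiveMemory:
    """Fixed-size memory: M is d_k x d_v, z is a length-d_k normalizer."""

    def __init__(self, d_k, d_v):
        self.M = [[0.0] * d_v for _ in range(d_k)]
        self.z = [0.0] * d_k

    def retrieve(self, q):
        # A_mem = sigma(Q) M / (sigma(Q) z); returns zeros while memory is empty.
        sq = [[elu_plus_one(x) for x in row] for row in q]
        num = matmul(sq, self.M)
        out = []
        for i, row in enumerate(sq):
            denom = sum(a * b for a, b in zip(row, self.z))
            out.append([x / denom if denom > 0 else 0.0 for x in num[i]])
        return out

    def update(self, k, v):
        # M += sigma(K)^T V and z += sum over tokens of sigma(K).
        sk = [[elu_plus_one(x) for x in row] for row in k]
        delta = matmul(transpose(sk), v)
        for i, row in enumerate(delta):
            for j, x in enumerate(row):
                self.M[i][j] += x
        for row in sk:
            for i, x in enumerate(row):
                self.z[i] += x


def infini_attention(segments, beta=0.0):
    """segments: list of (q, k, v) matrices, one tuple per input segment."""
    gate = 1.0 / (1.0 + math.exp(-beta))
    mem = CompressiveMemory(len(segments[0][1][0]), len(segments[0][2][0]))
    outputs = []
    for q, k, v in segments:
        a_mem = mem.retrieve(q)  # reads only what earlier segments stored
        a_dot = local_attention(q, k, v)
        outputs.append([[gate * m + (1 - gate) * l for m, l in zip(rm, rl)]
                        for rm, rl in zip(a_mem, a_dot)])
        mem.update(k, v)
    return outputs
```

However many segments are processed, the loop only ever holds one segment's attention matrix plus the fixed-size memory, which is where the bounded memory and computation come from.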

Meta Releases Llama 3

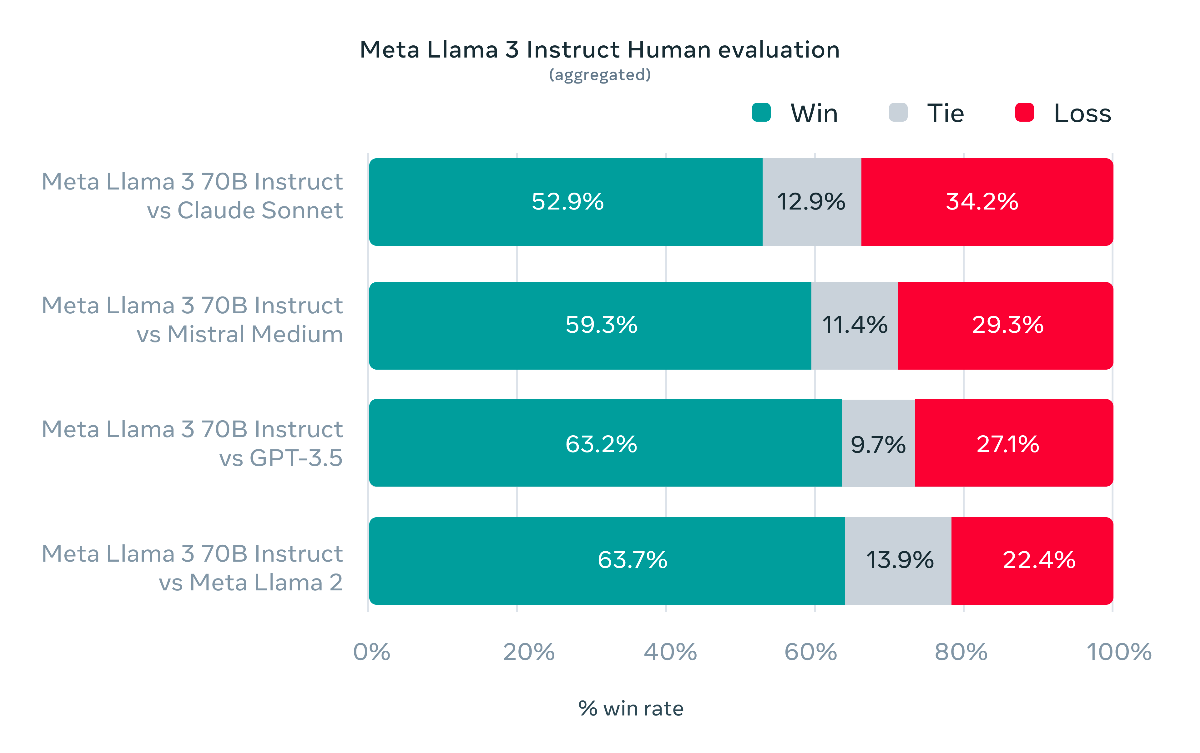

On April 18, Meta released the newest iteration of its Llama large language model. For now, Llama 3 is available in 8B and 70B parameter variants, but Meta aims to release a 400B version soon. According to Meta’s experiments, Llama 3 punches above its weight compared to other similarly sized models, as seen below:

Video game: Helldivers 2

Judith’s game of the month is Helldivers 2, a cooperative third-person shooter that's an absolute blast to play with a “squad” of up to 4 people. Even if none of your friends play this game, the matchmaking system makes it easy to team up with random strangers. The online co-op culture is incredibly friendly and welcoming, with higher-level players often lending a hand to newcomers.

The game pits you against two types of foes: the "bots", inspired by the Terminator and Star Wars droids, and the swarming "bugs", reminiscent of the aliens from Starship Troopers or the Zerg from StarCraft. Accidentally killing your teammates is inevitable and part of the fun. You might know developer Arrowhead Game Studios from the chaotic co-op game Magicka.

What really sets Helldivers 2 apart is its outstanding sense of humor, with satirical flavor text reminiscent of Paul Verhoeven's Starship Troopers film. The game is brimming with tongue-in-cheek jokes that have spawned an active community of meme-creators on platforms like Reddit. To get a taste of this irreverent flavor, just watch the game's intro movie – “undivided attention is mandatory” and “not watching it is treason”.

Datakami news

Pictured: Andre Foeken giving a presentation about (generative) AI at Nedap

AI Diner Pensant at Nedap

Datakami attended the second AI Diner Pensant, an event organized by AI-hub Oost-Nederland, the Dutch AI Coalition, Oost NL, and Nedap. The event brought together a prominent group of people from AI-focused companies, knowledge institutes, startups, regional companies, and local hubs to talk about the opportunities and threats of AI, and the steps the Netherlands should take to remain at the forefront of this technology. The findings from the dinner discussions will be turned into a recommendation document for the Dutch government. You might also spot Judith in the aftermovie of the event.


Subscribe to our newsletter "Creative Bot Bulletin" to receive more of our writing in your inbox. We only write articles that we would like to read ourselves.