Creative Bot Bulletin #11

By Alexander de Ranitz

A NOTE FROM THE EDITOR

Good day! I hope you're doing well, and welcome back to Datakami's Creative Bot Bulletin. This month, we highlight how generative AI is also changing the game in robotics, showcasing two autonomous robots that use pre-trained vision-language models. We also touch upon rumours of disappointing results from new models, a major new investment, and how positional encoding works. I hope you enjoy the read, and until next time!

—Alexander

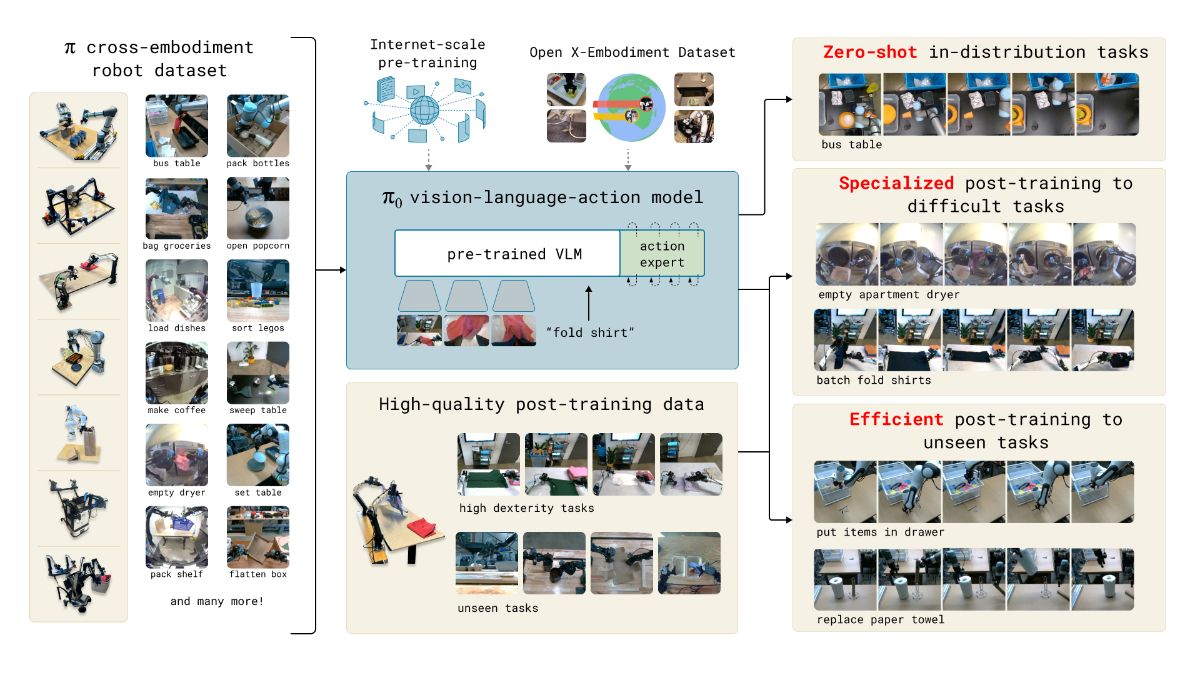

Featured: π0, a General-Purpose Robot Foundation Model

Physical Intelligence has recently released their first “general-purpose robot foundation model”. This AI model, called π0, is trained to control various robots to do a wide range of tasks in complex real-world environments. By using a pre-trained multi-modal LLM, the robots can understand images and natural language, allowing users to easily interact with the robots. Check out their post to find out more and to see some of their demonstrations.

Helping Spot See Using Foundation Models

Boston Dynamics' Spot robot needs to sense and understand its environment to move around safely and quickly. Previously, this was largely done using depth cameras that allow Spot to form a 3D map of its surroundings. However, depth information alone is not always enough to spot small objects or to determine whether something is slippery, fragile, or movable. To address this, an open-set object detector was incorporated into Spot, giving it a better semantic understanding of its surroundings and allowing it to move around more effectively. For more info, check out this demo video or this blog.

LLM Scaling Slowing Down?

Rumours abound that AI companies are seeing disappointing results from their newest generation of models. Several sources told Reuters and Bloomberg that simply increasing model size and compute is no longer delivering the expected improvements, departing from the trend predicted by the LLM scaling "laws". Companies are also struggling to find more high-quality training data. As a result, alternative ways of improving models, such as tool use and increasing test-time compute, are becoming increasingly popular.
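Scaling "laws" are empirical fits, often written in the form loss ≈ E + A/N^α + B/D^β, where N is the number of parameters and D the number of training tokens (the form used in DeepMind's Chinchilla work). The minimal Python sketch below uses made-up constants, which are assumptions for illustration and not fitted values, to show one property worth keeping in mind: even when such a law holds, each doubling of model size buys a smaller absolute drop in loss. The rumours suggest that recent models are falling short even of these shrinking, predicted gains.

```python
def power_law_loss(n_params, n_tokens, E=1.7, A=400.0, B=400.0, alpha=0.34, beta=0.28):
    """Chinchilla-style loss curve: an irreducible term E plus power-law terms
    that shrink as model size (n_params) and data size (n_tokens) grow.
    The default constants are illustrative assumptions, not fitted values."""
    return E + A / n_params**alpha + B / n_tokens**beta

n_tokens = 1e12  # hold the (hypothetical) training-data budget fixed
sizes = [1e9 * 2**k for k in range(6)]  # 1B, 2B, 4B, ..., 32B parameters
losses = [power_law_loss(n, n_tokens) for n in sizes]

# Loss reduction gained from each successive doubling of model size.
gains = [prev - nxt for prev, nxt in zip(losses, losses[1:])]

for n, gain in zip(sizes[1:], gains):
    print(f"doubling to {n / 1e9:.0f}B params lowers predicted loss by {gain:.4f}")
```

Swapping in other positive exponents changes the numbers but not the pattern: under this form, each gain is the previous one multiplied by 2^-alpha.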

Amazon Invests Another $4B in Anthropic AI

Amazon has announced that it will invest another 4 billion dollars in Anthropic AI, the creator of the Claude AI models. Anthropic was already using AWS as its cloud provider, but following this deal, it will also train and run its models on Amazon’s own hardware. As a result, part of this investment will flow right back into Amazon, which can also collaborate with Anthropic to further improve its Trainium and Inferentia chips.

You Could Have Designed SOTA Positional Encoding

On its own, the attention mechanism used in LLMs is blind to token order: it is permutation equivariant, meaning that shuffling the input tokens simply shuffles the outputs in the same way. But because word order is obviously semantically meaningful, positional encoding is used to inject positional information into the model. Most modern LLMs use Rotary Positional Encoding (RoPE) for this. In this blog, Chris Fleetwood walks you through iteratively designing this algorithm based on a few requirements and intuitions. This is a great way to understand both what RoPE does and why it does so. If you want to dive deeper into positional encoding, also check out this paper on how RoPE does (and doesn't) work in practice.
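To make both ideas concrete, here is a minimal NumPy sketch (our own illustration, not code from the blog or the paper). It assumes a single attention head with identity query/key/value projections and no causal mask, which keeps the example small. The first check shows that permuting the input tokens of plain attention only permutes the outputs. The second applies a RoPE-style rotation to a query and a key: each consecutive pair of dimensions is rotated by position × frequency, using the commonly cited base of 10000. It then checks that their dot product depends only on the distance between the two positions.

```python
import numpy as np

def attention(X):
    """Single-head self-attention with identity Q/K/V projections and no mask."""
    scores = X @ X.T / np.sqrt(X.shape[-1])
    scores -= scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)  # row-wise softmax
    return weights @ X

def rope(x, pos, base=10000.0):
    """Rotate each consecutive pair of dimensions of x by pos * theta_i."""
    d = x.shape[-1]
    assert d % 2 == 0, "RoPE needs an even dimension"
    theta = base ** (-np.arange(0, d, 2) / d)  # one frequency per pair
    cos, sin = np.cos(pos * theta), np.sin(pos * theta)
    x_even, x_odd = x[0::2], x[1::2]
    out = np.empty_like(x)
    out[0::2] = x_even * cos - x_odd * sin  # 2D rotation of each pair
    out[1::2] = x_even * sin + x_odd * cos
    return out

rng = np.random.default_rng(0)

# 1) Without positional information, order is invisible:
#    permuting the tokens only permutes the outputs.
X = rng.normal(size=(5, 8))  # 5 tokens, 8-dim embeddings
perm = rng.permutation(5)
print(np.allclose(attention(X[perm]), attention(X)[perm]))  # True

# 2) With RoPE, the query-key score depends only on relative position:
#    shifting both positions by the same offset leaves it unchanged.
q, k = rng.normal(size=8), rng.normal(size=8)
score_3_7 = rope(q, 3) @ rope(k, 7)
score_10_14 = rope(q, 10) @ rope(k, 14)
print(np.isclose(score_3_7, score_10_14))  # True
```

The second check holds because rotating q by mθ and k by nθ leaves their dot product equal to q rotated against k by (n - m)θ. In real models the rotation is applied to the projected queries and keys in every attention layer. Note that some implementations pair the first half of the vector with the second half instead of interleaving neighbouring dimensions.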

Datakami news

NixCon presentation

Yorick presented at NixCon 2024 in Berlin. A recording of his talk, "Deploying random AI models: from pip nightmare to dream2nix", is now available on YouTube.

Deploying random AI models: from pip nightmare to dream2nix

Or: how I stopped worrying and learned to love docker images

How can we take existing Python software and turn it into a docker image? Can we use the power of Nix? Can we make it easy?

I'll tell you all about my new half-finished project, where I smash dream2nix and docker-tools together to deploy other people's AI models to the cloud in a reproducible manner.

Along the way, we'll have brief detours through flake-parts, docker internals and nvidia software.

Holiday wishes

This is the last newsletter of 2024.

At Datakami, we wish you happy holidays and we'll see you in 2025!


Subscribe to our newsletter "Creative Bot Bulletin" to receive more of our writing in your inbox. We only write articles that we would like to read ourselves.